Why the Data Analyst role has never been harder

Modern Data Stack brought new opportunities and complexities, along with new expectations. Here’s why today’s data analysts are stretched underneath the new demands.

You arrive at the office excited for the day ahead. Only a quick team catch-up, then you plan to look into the product feature adoption trends. It's the analysis you wanted to do for a while. But a few minutes after the team catch-up, you get a Slack message:

“Hey Tom, the conversion dashboard seems broken. Could you please look into it? It’s quite urgent.”

Grrrr. Ok. You head over to open a BI tool to have a look.

One of the vital pipeline conversions stands out. The trend has been going up for the last few months, and you see a drop. How do we know that it’s wrong, you wonder? Could it be genuine? After a few messages with Bob, you confirm that he is not sure either. The numbers just “seem off.”

And so you start debugging.

First, you look at the data lineage. You head over to DBT docs1 to the table queried from the dashboard. In a few clicks, you have it:

Whoah!? It has a lot of dependencies :(

Some of these tables are new, and you don’t know them all well. You message Bob: “Hey Bob, I am not sure I will know by your meeting this afternoon. I will let you know”.

And so you spend the next few hours jumping between BI tools, git to review code changes, DBT to check run results, or running queries on twenty different tables. By the end of the day, you find out what happened—the setup of Stripe changed, breaking your and many other dashboards.

“What a mess!” You shout. (Or at least you imagine doing so in your head.) This is not what you wanted to spend your day on!

If you are a Data Analyst, I bet you’ve had days like these. And while it is frustrating, it's the harsh reality of today’s data stacks.

On the surface, data looks like this:

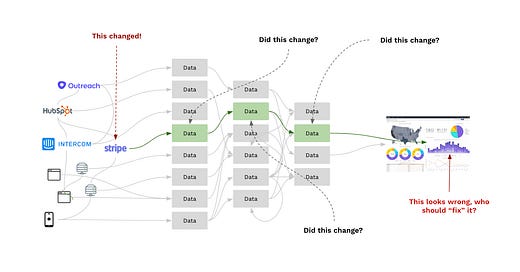

Under the hood, it looks like this:

It is an intricate maze of data tables, models, and pipelines with interconnected dependencies, which a data analyst has to navigate.

Here’s what I’ve seen:

Data is ambiguous. It’s not always 100% clear what table is the best source of data you need. Some tables are out-of-sync with the latest business definitions, and it’s often hard to determine which one is right.

Upstream changes break the models. Sometimes an unintended change in one of the upstream systems corrupts the data or deletes an entity attribute altogether. The analysts find out only retrospectively when things don’t work.

Data pipelines crash. 3rd party SaaS tools manage many data pipelines, but that doesn’t mean they don’t break. They do. And it’s not always clear why. It could be a source tool, ingest, data warehouse, or a wrong change in configuration.

Data models grow messy. As the stack grows, the SQL code accumulates. Even with the latest data modelling techniques, the messy code finds its way into critical data models. The colleague who wrote it has left the company, unable to explain their logic anymore.

And the expectations of the business always keep growing. The stakeholders expect new analysis, dashboards, reports, drop-down filters, or “quick data pulls” with increasing pace. The analyst is always one step behind the demand for the next piece of data.

The curse of complexity around the Modern Data Stack

In today’s business, on average, 110 SaaS tools2 produce data every single day.

Before the Modern Data Stack emerged, this data was siloed. A typical company didn’t have an army of engineers to build custom pipelines for every single tool. Data sitting in these tools was out of reach.

Not any more.

The tools like Fivetran3, Airbyte4 plug the majority of this data into the data warehouse.

And with access, the expectations of the business grew too. Now, we expect a fluent flow of data through the company. This means that:

Salespeople have a prioritised list of leads based on scoring, calculated using past accounts product adoption patterns.

CX reps have account health scores calculated based on every user’s engagement with all product features.

Product managers have insights from the latest A/B test weighted by LTV of accounts.

Marketing teams have an end-to-end return of ad spend measurement.

And much more.

And with the modern data stack, we can do each of them.

Modern Data Stack provided necessary technology capabilities. It opened access and processing power to work with all company data. But it also created a lot of complexity. In the middle of this is a data analyst working with a previously unseen variety of data to satisfy the growing business expectations. And the complexity is exploding.

Today’s data ecosystems are starting to match the scale of software systems. To be more precise, distributed software systems. We see many components scattered across the company operating as individual parts of distributed systems with intricate dependencies as the data flows in and out.

No wonder that Analytics Engineering is on the rise, and new roles like Analytics Engineers are being created. We’re bringing lessons learned in Software Engineering to Data to help us manage our scaling needs.

And there is more we need to do.

The missing toolbox for Data Systems

Over the past eight years, I’ve spent a lot of time working across both Software and Data. I’ve seen one crucial difference—the toolbox we have available in the software world to debug and investigate any issues is so much further ahead.

I was reminded of this last Friday.

After a few examples of degraded performance of an applications I was working on, I decided to investigate what was going on.

I opened Datadog5 to see a breakdown of all API calls made from our frontend application. With an overview of API call frequency, mean and median response times, or summary of caller components, I quickly identified what was going on. One API call was much slower than the others.

I switched to an application performance management tool (APM) to explore its traces. With the breakdown of time spent querying the database, processing results, and communicating with other subsystems, it was clear—too many slow calls to the database.

To finish the analysis, I looked into DB statistics to get the last piece of data to understand the issue.

In an hour, I wrote a report about what was causing the application to be slow—from the frontend, through the APIs, app’s internals, and database.

With the caliber of tools we have in software, we can debug vastly complex systems. Unfortunately, the experience is very different in data. We don’t have the same tools, and it is frustrating.

What the next generation of data monitoring tools looks like

And so, I am left wondering what the future could look like. The Modern Data Stack opened so many new options for working with the data, but the toolkit is a lot less fantastic. We need better tools to keep up with our ambitions to harness all our data.

Here is my (non-exhaustive) wishlist:

More testing tools. I am glad we have tools like DBT tests to assert the data, checking for many common conditions—is the data empty, within the expected range, or matching between tables. But when we’re about to hit a “Merge” button in a project with hundreds of data models, it’s still quite hard to feel safe that nothing will break. We often lack confidence. That means that we might be missing even more powerful tests.

Log-level diagnostics. When data goes wrong, it could be a failed load job, data warehouse timeout, unfinished DBT run, incorrect reverse ETL configuration, changed schema, misconfigured SQL query, failed scheduler, or many others. Fortunately, all tools produce insightful logs, but we lack the log analysis tools. The software ones are not a fit. The data world is different. We have a hierarchy of datasets, tables, and pipelines, and we want to analyze the data systems differently.

End-to-end lineage. It reveals dependencies, which are vital to trace errors to the source. We have made good progress—for example, DBT’s lineage. But it’s only part of the journey. The data flows from tools, through data ingest, to the warehouse and then back to the business. We need to map it end-to-end. Is open lineage the answer?

Performance insights. Is the slow query caused by the data warehouse or a BI tool? Is the trend improving or getting worse over the last month? Are the pipelines getting slower? By how much on average? And which ones? It’s hard to get good performance insights from across the data stack.

Better (active) error detection. When data stops working, it’s often undetected. Sometimes for quite a while, until an important meeting when someone notices that data looks “weird.”

Luckily, tooling around data quality is gaining momentum. Many companies actively work on products addressing some of the challenges I’ve outlined above. This leaves me very optimistic.

At the same time, my experience on Friday—using Datadog to diagnose a Software system—seems distant. It took Datadog 11 years to build their robust portfolio of diagnostics tools, from logs to alerting to tracing to database query performance, all very well integrated.

It will take some time for the data stack toolbox to catch up.

Until then, the next time you see a broken dashboard and a maze of complexity behind it, rest assured: it’s not just you. We all have to navigate this new ecosystem without all the tools we would like to have.

https://docs.getdbt.com/docs/building-a-dbt-project/documentation

https://www.statista.com/statistics/1233538/average-number-saas-apps-yearly/

https://fivetran.com/connectors

https://airbyte.io/connectors

https://www.datadoghq.com/

Agree 100% ! That’s why we at Keboola https://www.keboola.com/ actively work on the product addressing most of the challenges you have outlined above. We have created an API-first data platform. You can build and maintain the whole business logic in code, use our user-friendly interface or do both. It is possible to set up infrastructure as code and deploy with CI/CD pipelines.

Hi Petr, great articles! I was drawn here by a link shared by my colleague Victor on LI. Yep, totally agree with you. And as dataops manifesto says, data is different than sw as we have different personas and actually much complex ecosystem. The debug you described on SW DevOps side you can do on Keboola platform very similar way. We have full observability on top of the platform with FinOps and SecOps. We also understand that each persona need different access. So next to UI and APIs we've created whole CLI experience: https://www.keboola.com/product/cli Happy to show you, would love to get your feedback. pavel.dolezal@keboola.com or @pabu01